Unit ⑧ Letter #20246

We decided to open up to our wider network and share a selection of our resources with our colleagues and followers. 🦾

Dear Unit ⑧ friends,

We have been exchanging readings internally for a while, sharing links we find relevant to our development as a small organization as we look for meaningful directions to head in and try to keep up to date with technology. We will cover topics ranging from the legal regulation of blockchain and AI to the nature of consciousness and what it entails, from technical, political, ethical, and artistic points of view. Here are some of the articles that caught our attention over the past few weeks. We hope you enjoy them!

Vitalik on Politics

In one of his most recent blog posts, Ethereum co-founder Vitalik Buterin urges readers not to vote for political candidates simply because they support crypto. He argues that such politicians sometimes exploit the topic to win votes even when they are completely misaligned with the principles that originally drove the development of cryptocurrency. Buterin lists the original principles of blockchain and explains why it is essential to stick to them when deciding who to vote for.

Leopold Aschenbrenner

Much has been written and said about Leopold Aschenbrenner, now the founder of an AGI-focused investment firm.

In an interview with Dwarkesh Patel, former OpenAI safety researcher Leopold Aschenbrenner talks about the trillion-dollar cluster, Artificial General Intelligence (AGI), CCP espionage at AI labs, leaving OpenAI, and starting an investment firm, among other topics. He presents these topics in depth in a paper published on his website, Situational Awareness, in which he argues that AI is on a trajectory from today’s advanced systems to AGI, and then to superintelligence, with AGI likely by 2027.

In his Substack post, Zvi Mowshowitz condenses Aschenbrenner’s 165-page paper by roughly 80%. Mowshowitz highlights quotes and graphs from the paper that explain the developments in AI that could lead to AGI by 2027, potentially transforming every sector of society and posing significant challenges as well as opportunities.

In his article for LessWrong, Simon Möller describes the notion of situational awareness in large language models (LLMs), focusing on specific model instances, especially deployed ones such as GPT and Sydney, and framing the notion as a spectrum. Gauging how well an AI understands its environment and its own state is crucial for tasks such as avoiding deceptive alignment, and it can help manage the risks that come with AI’s ability to act with apparent autonomy and intent.

Writing for Decrypt, Jose Antonio Lanz reports on Leopold Aschenbrenner’s criticism that OpenAI’s safety measures are insufficient because the company prioritizes rapid growth over security. This criticism has opened up broader debates within the AI community about safety, transparency, and corporate governance.

In his AI Supremacy Substack, Michael Spencer observes that prominent personalities at OpenAI are vocal proponents of AGI, and that their influence can sometimes overshadow critical discussion of the technology’s rapid development and deployment. He covers Aschenbrenner’s concerns about AGI and about how it is promoted on the strength of personalities, striking a skeptical note toward the tech community.

The Role of AI Metaphors in Shaping Regulations

In her Substack post, communication researcher Nirit Weiss-Blatt discusses how the metaphors, analogies, and terms used to describe artificial intelligence significantly shape how policies are crafted and interpreted, which calls for a deliberate approach to terminology in AI discourse. She highlights the need for clarity and strategic use of language in AI policy contexts, as detailed in a report by an Effective Altruism (EA) organization focused on AI existential risk.

AI #69: Nice

Zvi Mowshowitz’s article discusses advances that push the boundaries of AI capabilities and applications, while highlighting significant, largely unaddressed concerns about safety, ethics, and regulation. Mowshowitz points to Ilya Sutskever, OpenAI’s former chief scientist, who recently founded a new company, “Safe Superintelligence,” with Daniel Gross and Daniel Levy after leaving OpenAI. The article goes on to discuss legislative changes in AI regulation, the appointment of former NSA director Paul Nakasone to OpenAI’s board, and various thoughts on AI capabilities and safety frameworks.

GPT-5… now arriving Gate 8, Gate 9, Gate 10

Gary Marcus treats the numerous optimistic predictions about the launch of GPT-5 with some irony. Because its development and release have been repeatedly delayed, Marcus compares the anticipation surrounding GPT-5 to the chaotic landing scene from the movie “Airplane!” Despite the optimistic predictions, GPT-5’s release still appears distant, which points to a gap between expectations and reality in technological progress, as well as to the overconfidence often seen in technology forecasts.

Podcast

In this episode of the New Models podcast, Caroline Busta reads her text “Hallucinating Sense in the Era of Infinity Content,” an exploration of the role of the “technical image” in contemporary culture that draws on arthouse cinema, fast fashion, and the impact of streaming platforms. Busta examines how these elements reshape meaning-making in a world flooded with infinite content. The text, published in 2024, references Vilém Flusser, Kevin Munger, K Allado-McDowell, Holly Herndon and Mat Dryhurst, Jon Rafman, Dean Kissick, Theo Anthony, Lola Jusidman, Film01, Bernard Stiegler, Olivia Kan-Sperling, Chris Blohm, Niklas Bildstein Zaar, Andreas Grill, Anna Uddenberg, Simon Denny, Trevor Paglen, Joshua Citarella, Jak Ritger, Hari Kunzru, Loretta Fahrenholz, Dorian Electra, Michael Franz, Kolja Reichart, Shein, Lil Internet, and the NM Discord.

Books

Kevin Munger’s book “The YouTube Apparatus” explores the concept of “poetic validity,” under which YouTube’s content creators are more accurately described as “creatures” shaped by their audiences and by the platform’s systemic apparatus, challenging traditional notions of how content is created and consumed. Munger’s argument draws on research analyzing 15 years of data from political channels on YouTube. Munger also criticizes the current academic publishing model, citing the significant sum required to make the work open access.

Videos

The annual festival re:publica took place in Berlin at the end of May. The sheer number of talks on the state of politics and technology, in Germany and globally, was overwhelming. Fortunately, the organizers have released recordings of most sessions, so you can revisit them or watch them for the first time. Here you can check them all out.

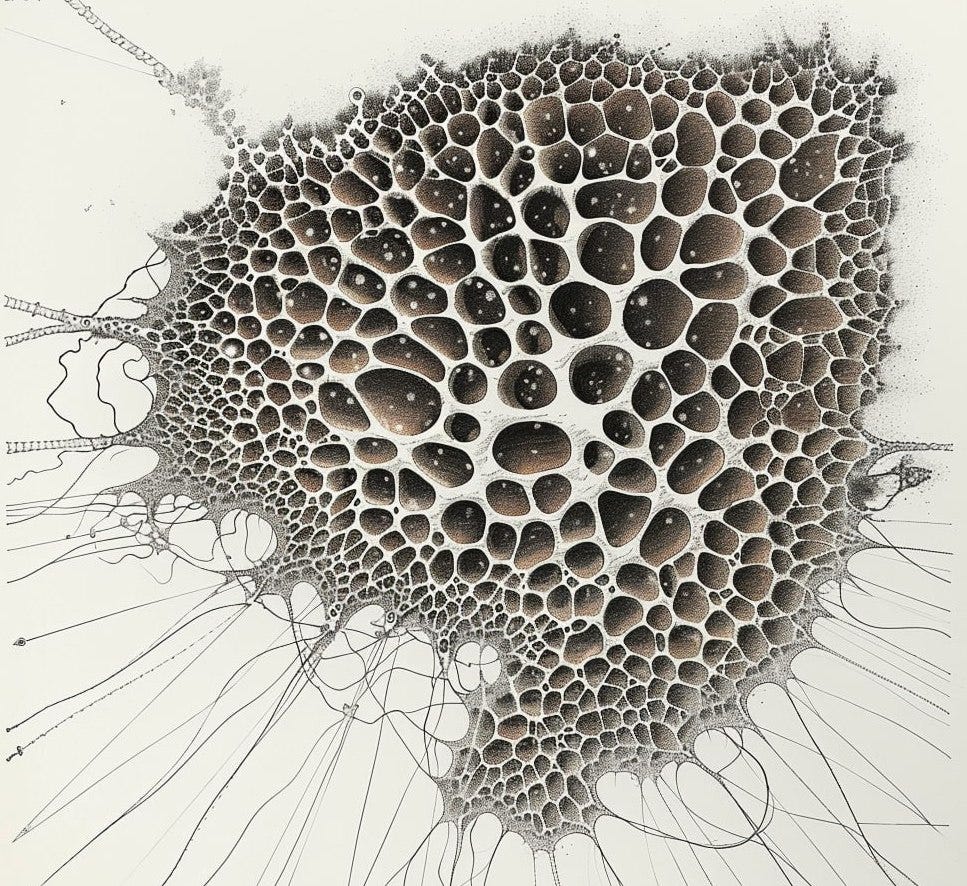

Art

Read our recent post about the Generative AI Summit held in Berlin at the beginning of the month. Our review mentions Le Random, among other artistic initiatives in the ecosystem. Le Random is a digital and generative art library that emphasizes the importance of historical context, particularly for classifying current and upcoming creations in the digital sphere. Aiming to educate the public while learning alongside it, Le Random is building an editorial platform and an ongoing timeline. They believe that placing on-chain art within broader historical contexts can help reveal the aesthetic potential of computers while also promoting artists and their contexts. Their latest article, “Frieder Nake on ‘Machinic’ Miracles,” is about one of digital art’s earliest practitioners. Nake spoke with Peter Bauman about the origins of algorithmic art and the evolution of his practice.